Using simulation as an efficient and safe way towards autonomy in subsea operations

Autonomous and unmanned systems are the next generation of technology arriving in subsea operations. They promise to make subsea operations cheaper and safer and to reduce their carbon footprint, while bringing people onshore. Industries such as Oil & Gas and Ocean Renewables are pushing the envelope when it comes to turning these technologies from concepts on paper into reality.

But this is no easy task; it takes several players working together to build the various pieces of the puzzle. Developing these new systems is costly and, when multiple organizations are involved, can also be slow. From the design of a new underwater vehicle, through its first time in the water, to its readiness for commercialization, there is a lengthy process that requires significant effort and coordination.

Subsea Is Complex

Achieving full autonomy in a subsea operation is no small feat, and mixing in the push for remote operations doesn’t help. The conditions are tough, and different scenarios might require creative approaches. Even though underwater communications have advanced considerably, reliable connectivity is still not something we can take for granted down there.

What happens when an expensive vehicle that’s still undergoing development experiences a mechanical fault underwater? Surely, we don’t want to discover that in the middle of the ocean. Moreover, all sorts of unpredictable faults can be triggered over a vehicle’s lifetime in these demanding environments, and they are hard to replicate in a test pool. Simulation can prove invaluable in making the vehicle’s behavior under such conditions more predictable.

Virtualizing Testing Environments

This is not a new problem. Autonomous road vehicles have made significant progress in the last few years, and a key factor in that success was literally “driving” countless kilometers virtually. Both industries face a similar problem: testing these new technologies in the real world is hard and expensive. Simulation, however, helps achieve the end goal while reducing costs and increasing efficiency.

At Abyssal, we believe that simulation plays a crucial role in speeding up the development and iteration of autonomous systems. However, bespoke simulators and software sandboxes built for testing new vehicles are often black boxes crunching numbers without the context of the dynamic environments that exist in the real world. Testing the vehicle assembly and systems this way is useful, but for autonomous vehicles it is generally far from enough.

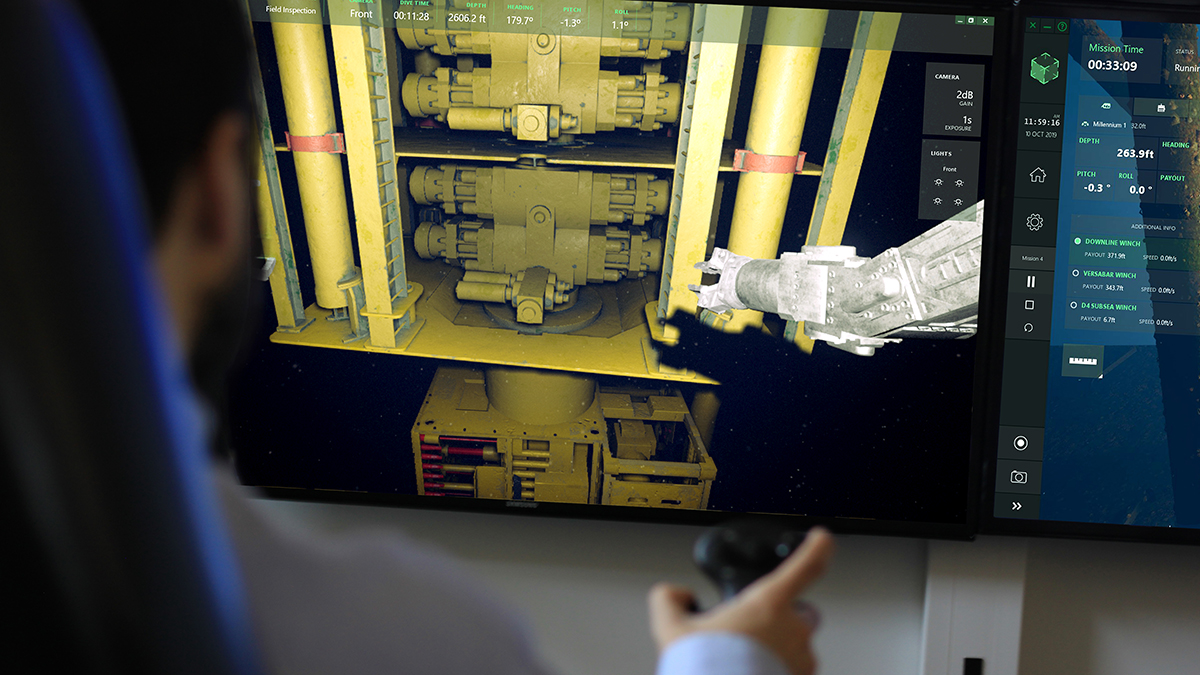

Our Simulator integrates easily with hardware devices for piloting and interaction, such as a chair or a master controller.

We are continuously building tools to easily create realistic dynamic environments, coupled with a survey-grade geographic information system and plausible or extreme conditions, mimicking the real world as closely as possible. The goal is to let engineers model their new vehicles in a high-fidelity simulated scenario and check how the vehicles, sensors, and control units perceive and act on their surroundings.

Materializing the Concept

We recently worked together with a manufacturer of a new Underwater Intervention Drone, creating a system to control the vehicle over the cloud using our Simulator as a virtual testing environment.

We started by importing the vehicle model from its CAD data and modelling its physics, its actuators (such as thrusters), and the cameras and other sensors it needs to function correctly. Within a couple of hours (depending on the vehicle’s complexity, this can take up to a few days), we were able to pilot the vehicle in a high-fidelity, physics-based simulation. We conducted initial hydrostatic and hydrodynamic behavioral tests, which eventually revealed problems with the chassis.
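To give a sense of what an initial hydrostatic check involves, here is a minimal Python sketch that computes a fully submerged vehicle’s net buoyancy and its roll restoring moment. It is not the Simulator’s physics model: the `HydrostaticProps` class, the seawater density and the example vehicle’s numbers are illustrative assumptions, and the moment formula assumes buoyancy roughly equals weight.

```python
import math
from dataclasses import dataclass

G = 9.81               # gravitational acceleration, m/s^2
RHO_SEAWATER = 1025.0  # typical seawater density, kg/m^3 (assumed)


@dataclass
class HydrostaticProps:
    """Hypothetical hydrostatic properties of a fully submerged vehicle."""
    mass_kg: float              # mass in air
    displaced_volume_m3: float  # volume of water displaced when submerged
    z_cg_m: float               # height of the centre of gravity (z up)
    z_cb_m: float               # height of the centre of buoyancy (z up)


def net_buoyancy_n(v: HydrostaticProps, rho: float = RHO_SEAWATER) -> float:
    """Buoyant force minus weight; positive means the vehicle tends to rise."""
    return (rho * v.displaced_volume_m3 - v.mass_kg) * G


def righting_moment_nm(v: HydrostaticProps, roll_rad: float) -> float:
    """Roll restoring moment of a submerged vehicle, assuming buoyancy ~= weight.

    Positive values push the vehicle back towards upright, which requires the
    centre of buoyancy to sit above the centre of gravity.
    """
    bg = v.z_cb_m - v.z_cg_m  # vertical separation between CB and CG
    return v.mass_kg * G * bg * math.sin(roll_rad)


# Illustrative vehicle, not real data.
drone = HydrostaticProps(mass_kg=100.0, displaced_volume_m3=0.1, z_cg_m=0.0, z_cb_m=0.05)
print(f"net buoyancy: {net_buoyancy_n(drone):.1f} N")
print(f"righting moment at 10 deg roll: {righting_moment_nm(drone, math.radians(10)):.2f} N*m")
```

For the example vehicle this gives about 24.5 N of positive buoyancy and a positive righting moment, because the centre of buoyancy sits 5 cm above the centre of gravity; swapping the two heights makes the moment negative, flagging an unstable layout.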

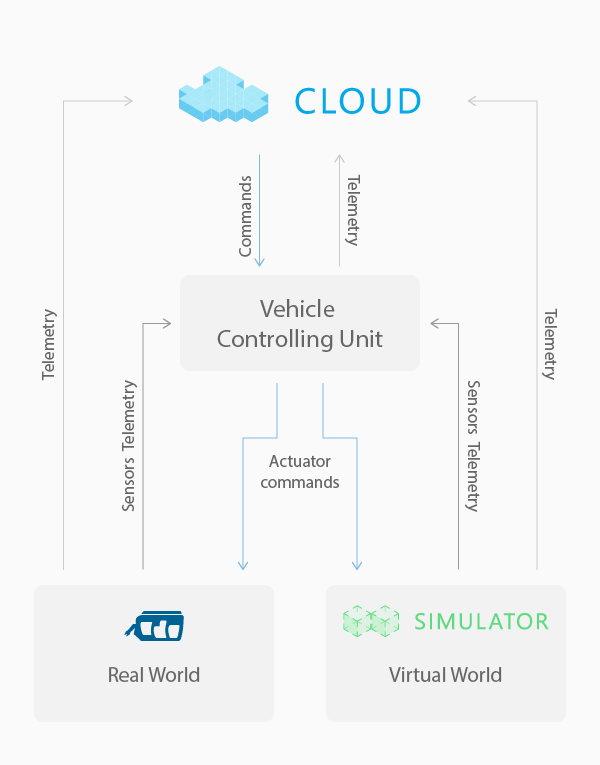

We then used Abyssal Simulator’s networking API to write a small publish-subscribe communication layer between our simulation and the vehicle control unit software. The simulation publishes telemetry for sensors and world state, while the control unit sends the desired state for each actuator. The vehicle engineers could then use their own control Human-Machine Interface (HMI) to execute autonomous commands. The results can be observed immediately in a 3D environment, in an easy and intuitive way and, more importantly, with the full context of the surroundings. This allows faster iteration when developing autonomous software and makes these complex systems easier to debug, thanks to the contextual information.
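The pattern behind that communication layer can be sketched in a few lines of Python. This is not Abyssal Simulator’s networking API, which is not described here; the in-process `Bus`, the topic names `telemetry/depth` and `actuators/setpoint`, and the toy depth-hold controller are hypothetical stand-ins that only show the message flow: the simulation publishes telemetry, and the control unit answers with actuator setpoints.

```python
from collections import defaultdict
from typing import Any, Callable, DefaultDict, Dict, List

Message = Dict[str, Any]
Handler = Callable[[str, Message], None]


class Bus:
    """Minimal in-process publish-subscribe bus, standing in for a network transport."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, message: Message) -> None:
        # Deliver synchronously to every handler registered for this exact topic.
        for handler in list(self._subscribers[topic]):
            handler(topic, message)


class SimulatedVehicle:
    """Simulation side (hypothetical): consumes setpoints, publishes telemetry."""

    def __init__(self, bus: Bus, depth_m: float) -> None:
        self.bus = bus
        self.depth_m = depth_m
        self.ascent_rate_mps = 0.0  # commanded vertical speed, positive = up
        bus.subscribe("actuators/setpoint", self.on_setpoint)

    def on_setpoint(self, topic: str, msg: Message) -> None:
        self.ascent_rate_mps = msg["ascent_rate_mps"]

    def step(self, dt_s: float) -> None:
        # Deliberately crude kinematics: the commanded rate is applied directly.
        self.depth_m -= self.ascent_rate_mps * dt_s
        self.bus.publish("telemetry/depth", {"depth_m": self.depth_m})


class DepthHoldController:
    """Control-unit side (hypothetical): proportional depth hold driven by telemetry."""

    def __init__(self, bus: Bus, target_depth_m: float, gain: float = 0.5) -> None:
        self.bus = bus
        self.target_depth_m = target_depth_m
        self.gain = gain
        bus.subscribe("telemetry/depth", self.on_depth)

    def on_depth(self, topic: str, msg: Message) -> None:
        error = msg["depth_m"] - self.target_depth_m  # positive = too deep
        self.bus.publish("actuators/setpoint", {"ascent_rate_mps": self.gain * error})


bus = Bus()
vehicle = SimulatedVehicle(bus, depth_m=10.0)
controller = DepthHoldController(bus, target_depth_m=5.0)
for _ in range(100):
    vehicle.step(dt_s=0.1)
print(f"depth after 10 s: {vehicle.depth_m:.2f} m")
```

Because the controller only talks to topics, it cannot tell whether the telemetry comes from a simulation or from real hardware, which is what makes the modular swap described below possible.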

We can also emulate sensor data channels and stream them wherever needed, for instance the rendered feeds of the vehicle’s cameras or sonar data. That is exactly what we did: we published the vehicle telemetry to our Offshore suite and streamed the camera feeds into Abyssal Cloud. This allowed us to control the simulated vehicle over the cloud from our operation monitoring software, which we will look at in more depth in a future post.
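Streaming sensor channels needs some framing so that a receiver can split a continuous byte stream back into individual messages. One common approach is a length-prefixed frame, sketched below in Python with the standard `struct` module. The header layout (channel id, microsecond timestamp, payload length) is an assumption for illustration, not the format Abyssal Cloud actually uses.

```python
import struct
from typing import List, Tuple

# Hypothetical wire format, big-endian with no padding:
# channel id (uint16) | timestamp in microseconds (uint64) | payload length (uint32) | payload
HEADER = struct.Struct(">HQI")

Frame = Tuple[int, int, bytes]


def encode_frame(channel_id: int, timestamp_us: int, payload: bytes) -> bytes:
    """Prefix a payload (e.g. a compressed camera image) with its header."""
    return HEADER.pack(channel_id, timestamp_us, len(payload)) + payload


def decode_frames(buffer: bytes) -> Tuple[List[Frame], bytes]:
    """Split a byte stream into complete frames and return any leftover partial bytes."""
    frames: List[Frame] = []
    offset = 0
    while len(buffer) - offset >= HEADER.size:
        channel_id, timestamp_us, length = HEADER.unpack_from(buffer, offset)
        end = offset + HEADER.size + length
        if end > len(buffer):
            break  # header received, but the payload is not complete yet
        frames.append((channel_id, timestamp_us, buffer[offset + HEADER.size:end]))
        offset = end
    return frames, buffer[offset:]


# Example: two channels interleaved on one stream, e.g. camera and sonar.
stream = encode_frame(1, 1_000, b"jpeg-bytes") + encode_frame(2, 1_050, b"sonar-ping")
frames, leftover = decode_frames(stream)
print(frames, leftover)
```

`decode_frames` returns any trailing partial frame so that the caller can prepend it to the next chunk received from the network.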

The Simulator emulates the vehicle and a realistic environment seamlessly, shifting the development focus to the Vehicle Controlling Unit.

With a modular architecture like this one, replacing the simulated vehicle with a physical one in the real world is simply a matter of flipping some switches and adjusting some configuration. There is no need to maintain a separate software stack for each environment. Moreover, the modular nature of this setup lets us simulate custom scenarios across the entire stack, such as faulty sensors and actuators, communication failures, and tampering with the integrity and consistency of the data. This can prove invaluable when developing complex and robust solutions, since these scenarios would otherwise have to be replicated in the real world, which can be both risky and costly.
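As an illustration of fault injection, here is a minimal Python sketch of a layer that sits between a sensor stream and its consumers and can drop messages, add noise, or freeze the output as a stuck sensor would. The `FaultInjector` class and its parameters are hypothetical and not part of any Abyssal product.

```python
import random
from typing import Optional


class FaultInjector:
    """Hypothetical fault layer placed between a sensor stream and its consumers.

    Supports message dropout, additive Gaussian noise and a 'stuck' sensor that
    keeps repeating its last output. Faults are seeded so runs can be replayed.
    """

    def __init__(
        self,
        seed: int = 0,
        drop_prob: float = 0.0,
        noise_std: float = 0.0,
        stuck_after: Optional[int] = None,
    ) -> None:
        self.rng = random.Random(seed)
        self.drop_prob = drop_prob
        self.noise_std = noise_std
        self.stuck_after = stuck_after  # freeze output after this many samples
        self._count = 0
        self._last: Optional[float] = None

    def apply(self, value: float) -> Optional[float]:
        """Return the possibly corrupted reading, or None if the message is lost."""
        self._count += 1
        stuck = self.stuck_after is not None and self._count > self.stuck_after
        if stuck and self._last is not None:
            return self._last  # stuck sensor: repeat the last delivered value
        if self.rng.random() < self.drop_prob:
            return None  # simulated communication failure
        out = value + self.rng.gauss(0.0, self.noise_std) if self.noise_std > 0 else value
        self._last = out
        return out


# Example: a depth sensor that freezes after three readings.
sensor = FaultInjector(stuck_after=3)
print([sensor.apply(d) for d in [10.0, 10.5, 11.0, 11.5, 12.0]])
```

Seeding the random generator means a failure that shows up in one run can be replayed exactly while the control software is being fixed.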

With a connection to the Offshore suite (left) through Abyssal Cloud, the Simulator (right) allows the systems to be tested end-to-end.

With this toolset we can design, train, test, and validate the decisions made by autonomous systems in a safe way. We can easily generate new testing scenarios, together with ground-truth data for the optimal decisions, in order to compare the performance of different systems or approaches. This speeds up and streamlines development, keeps the focus on the important tasks, and shortens the time to market of these emerging technologies.
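The idea of pairing generated scenarios with ground-truth decisions can be sketched in Python as follows. Everything here is hypothetical: the `Scenario` parameters, the abort thresholds in `ground_truth`, and the two candidate policies are made up purely to show how a simulator that knows the true world state can score competing systems against the optimal decision.

```python
import random
from dataclasses import dataclass
from typing import Callable, List


@dataclass(frozen=True)
class Scenario:
    """Hypothetical environment parameters for one test run."""
    current_speed_mps: float
    visibility_m: float


def generate_scenarios(n: int, seed: int) -> List[Scenario]:
    # A fixed seed makes the scenario set reproducible across test runs.
    rng = random.Random(seed)
    return [
        Scenario(current_speed_mps=rng.uniform(0.0, 2.0), visibility_m=rng.uniform(0.5, 15.0))
        for _ in range(n)
    ]


def ground_truth(s: Scenario) -> str:
    """Illustrative 'optimal' decision, computable because the true state is known."""
    return "abort" if s.current_speed_mps > 1.5 or s.visibility_m < 1.0 else "proceed"


Policy = Callable[[Scenario], str]


def agreement(policy: Policy, scenarios: List[Scenario]) -> float:
    """Fraction of scenarios in which the policy matches the ground-truth decision."""
    return sum(policy(s) == ground_truth(s) for s in scenarios) / len(scenarios)


def cautious(s: Scenario) -> str:
    # Candidate system A: aborts at a lower current threshold than necessary.
    return "abort" if s.current_speed_mps > 1.0 or s.visibility_m < 1.0 else "proceed"


def optimistic(s: Scenario) -> str:
    # Candidate system B: ignores visibility entirely.
    return "abort" if s.current_speed_mps > 1.5 else "proceed"


scenarios = generate_scenarios(1000, seed=42)
for name, policy in [("cautious", cautious), ("optimistic", optimistic)]:
    print(f"{name}: {agreement(policy, scenarios):.1%} agreement with ground truth")
```

Fixing the seed keeps the scenario set identical between runs, so any difference in the agreement scores comes from the policies themselves.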

Beyond Autonomous Vehicles

Autonomy is not just pre-programming behaviors that execute automatically when certain conditions are met. Subsea environments are dynamic and unpredictable, which means that autonomy requires intelligent systems. Machine learning is now making its way into subsea operations to help tackle all sorts of difficult problems, not only those of autonomous vehicles.

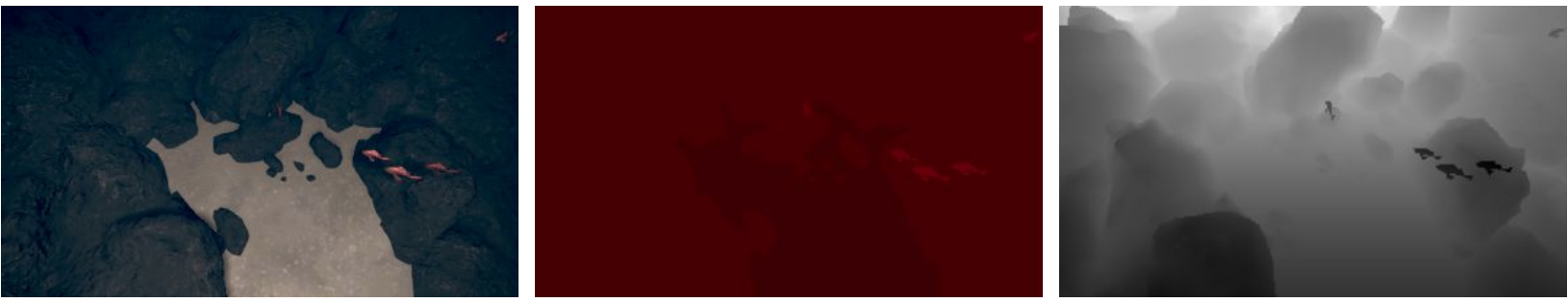

At Abyssal, our AI department uses our Simulator as a backbone for research in different domains. Since training deep learning models requires a lot of data, our AI researchers can simply plug into the networking API to control environment variables, gather large amounts of synthetic data, or improve models. For this use case, we can run headless simulations with sped-up tick rates, which is faster and more convenient. The high-quality data and imagery allow us to train accurate models that can then be transferred to real-world applications. More importantly, we can test our AI models in dynamic, rare, and unpredictable situations inside the simulator to make sure our systems are safe and robust before deploying them.
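Running headless with a sped-up tick rate usually comes down to decoupling simulated time from wall-clock time. The Python sketch below, a minimal illustration under that assumption rather than the Simulator’s actual scheduler, advances a fixed timestep and either paces ticks against the clock or, in headless mode, runs them back to back. The function name `run_fixed_step` and its `realtime_factor` parameter are hypothetical.

```python
import time
from typing import Callable, Optional


def run_fixed_step(
    step: Callable[[float], None],
    sim_duration_s: float,
    dt_s: float,
    realtime_factor: Optional[float] = 1.0,
) -> int:
    """Advance a simulation with a fixed timestep and return the number of ticks.

    realtime_factor=1.0 paces ticks to the wall clock, 10.0 runs ten times
    faster than real time, and None runs headless as fast as the CPU allows.
    Because dt_s never changes, the simulated results do not depend on pacing.
    """
    n_ticks = round(sim_duration_s / dt_s)
    start = time.perf_counter()
    for i in range(n_ticks):
        step(dt_s)
        if realtime_factor is not None:
            # Wall-clock instant by which tick i should have finished.
            deadline = start + (i + 1) * dt_s / realtime_factor
            remaining = deadline - time.perf_counter()
            if remaining > 0:
                time.sleep(remaining)
    return n_ticks


# Example: collect one minute of simulated samples headlessly.
samples = []
clock = {"t": 0.0}


def record_tick(dt_s: float) -> None:
    clock["t"] += dt_s
    samples.append(clock["t"])


ticks = run_fixed_step(record_tick, sim_duration_s=60.0, dt_s=0.01, realtime_factor=None)
print(f"{ticks} ticks, {len(samples)} samples, simulated time {clock['t']:.2f} s")
```

Keeping `dt_s` fixed is the important design choice: data collected headlessly follows exactly the same dynamics as a run paced in real time, it is simply produced faster.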

Artificial training data obtained from a virtual underwater scenario generated by the Abyssal Simulator.

Conclusion

Autonomy and remote control from onshore in subsea operations are no longer a matter of if, but when. These technologies will significantly change the way subsea operations are carried out. We have built a platform to help tame this new reality, equipping players of all kinds with a toolkit to test their innovations in sandboxed dynamic environments, increasing safety and efficiency while reducing costs.

And this is the space for the regular sealy pun.

Cristiano Carvalheiro

Cristiano Carvalheiro holds an MSc in Informatics and Computing Engineering from the Faculty of Engineering of the University of Porto. His master’s thesis focused on Virtual Reality and novel ways to provide haptic feedback in limited spaces. During his studies, he also worked on making smart and self-sustainable homes a reality. He has since joined Abyssal, where he leads development in simulation and is pushing the envelope on the roadmap to autonomous and unmanned vehicles in subsea operations.